v1.4.1 Now Available

SEO for AI Agents

Stop ChatGPT from hallucinating your library's API. Generate llms.txt context files automatically from your Docusaurus build.

    npm install docusaurus-plugin-llms-txt

Usage

Add the plugin to your docusaurus.config.ts (or .js):

    import type { Config } from '@docusaurus/types';
    import llmsTxt from 'docusaurus-plugin-llms-txt'; // Import the plugin

    const config: Config = {
      // ...
      plugins: [
        llmsTxt, // Add it to your plugins array
      ],
      // ...
    };

    export default config;

From this (Docs)...

# Introduction

Welcome to the API...

## Authentication

Use the `X-API-Key` header...

To this (LLM Context)...

// build/llms-full.txt

## Authentication

Source: /docs/auth

Use the X-API-Key header...
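One way to picture the step from docs to corpus is as a simple concatenation. The sketch below is not the plugin's actual implementation: the `DocPage` type and the `buildFullText` function are hypothetical names, and the output layout (heading, `Source:` line, body) is inferred only from the example above.

```typescript
// Hypothetical shape for a rendered doc page; not part of the plugin's API.
interface DocPage {
  title: string; // section heading, e.g. "Authentication"
  route: string; // site-relative URL, e.g. "/docs/auth"
  body: string;  // page text with markup already stripped
}

// Joins pages into one corpus using the "heading / Source: / body" layout
// seen in the llms-full.txt example. Returns "" when there are no pages.
function buildFullText(pages: DocPage[]): string {
  return pages
    .map((p) => `## ${p.title}\n\nSource: ${p.route}\n\n${p.body.trim()}\n`)
    .join("\n");
}

const corpus = buildFullText([
  { title: "Authentication", route: "/docs/auth", body: "Use the X-API-Key header..." },
]);
console.log(corpus);
```

Keeping a `Source:` line next to each section lets a retrieval pipeline cite the original page when it returns a chunk.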

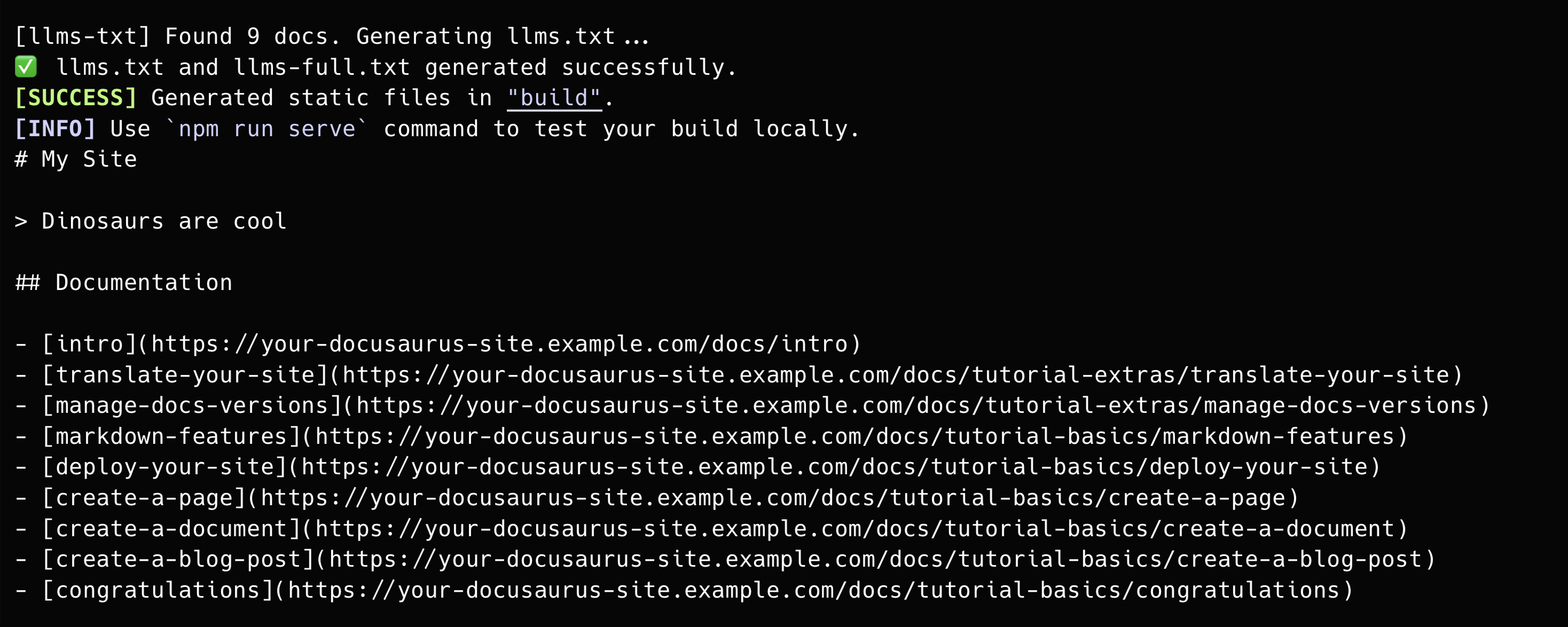

See it in Action

Readability

Generates a clean `llms.txt` index that helps AI agents discover your pages.
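As a rough illustration of what such an index can look like, here is a minimal sketch that follows the general shape of the llms.txt proposal: a title, a short blockquote summary, then a list of links. The `IndexEntry` type, the `buildIndex` function, the "Docs" section name, and the example URLs are all assumptions for illustration; the file the plugin actually emits may differ.

```typescript
// Hypothetical entry type; the field names are assumptions for illustration.
interface IndexEntry {
  title: string;
  url: string;
  description?: string;
}

// Renders a link index: H1 site title, blockquote summary, then one
// markdown link per page under a single "Docs" section.
function buildIndex(siteTitle: string, summary: string, entries: IndexEntry[]): string {
  const links = entries.map((e) =>
    e.description
      ? `- [${e.title}](${e.url}): ${e.description}`
      : `- [${e.title}](${e.url})`
  );
  const header = [`# ${siteTitle}`, "", `> ${summary}`, "", "## Docs", ""];
  return header.concat(links).join("\n") + "\n";
}

const index = buildIndex("My API", "Reference docs for My API.", [
  { title: "Authentication", url: "https://example.com/docs/auth", description: "API key setup" },
  { title: "Introduction", url: "https://example.com/docs/intro" },
]);
console.log(index);
```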

Full Context

Creates an `llms-full.txt` corpus for RAG pipelines and local LLM ingestion.

Zero Config

Works out of the box. Just add the plugin to your config and build.

Coming Soon in v1.6.0

Pro Features

Advanced capabilities for power users and enterprise documentation.

Advanced Filtering

- Config Exclusion: Ignore files via glob patterns (e.g. `['**/secret/**']`).

- Frontmatter: Add `llms: false` to exclude specific pages.
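Since these features are not released yet, their option names and exact matching rules are not known. The sketch below only illustrates the two ideas, with hypothetical helpers (`globToRegExp`, `isExcludedByFrontmatter`, `shouldInclude`) and a hypothetical `exclude` list. The glob support is deliberately minimal (`*` and `**` only) and stands in for whatever matcher the plugin ends up using.

```typescript
// Minimal glob-to-RegExp conversion: "**/" spans zero or more directories,
// a trailing "**" matches anything, and "*" matches within one path segment.
function globToRegExp(glob: string): RegExp {
  let re = "";
  let i = 0;
  while (i < glob.length) {
    if (glob.slice(i, i + 3) === "**/") {
      re += "(?:.*/)?";
      i += 3;
    } else if (glob.slice(i, i + 2) === "**") {
      re += ".*";
      i += 2;
    } else if (glob.charAt(i) === "*") {
      re += "[^/]*";
      i += 1;
    } else {
      // Escape regex metacharacters in literal path characters.
      re += glob.charAt(i).replace(/[.+?^${}()|[\]\\]/g, "\\$&");
      i += 1;
    }
  }
  return new RegExp("^" + re + "$");
}

// True when the page's leading frontmatter block contains "llms: false".
function isExcludedByFrontmatter(source: string): boolean {
  const match = /^---\r?\n([\s\S]*?)\r?\n---/.exec(source);
  if (match === null) return false;
  return /^llms:\s*false\s*$/m.test(match[1] ?? "");
}

// A page is kept only if no exclude glob matches its path and its
// frontmatter does not opt out.
function shouldInclude(path: string, source: string, exclude: string[]): boolean {
  const excludedByGlob = exclude.some((g) => globToRegExp(g).test(path));
  return !excludedByGlob && !isExcludedByFrontmatter(source);
}

const exclude = ["**/secret/**"]; // hypothetical config value
console.log(shouldInclude("docs/secret/keys.md", "# Keys", exclude));
console.log(shouldInclude("docs/intro.md", "---\nllms: false\n---\n# Intro", exclude));
console.log(shouldInclude("docs/auth.md", "# Authentication", exclude));
```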

JSONL Support

Generate `llms.jsonl` alongside your text corpus.

JSONL is the standard format for fine-tuning OpenAI & Anthropic models, so your docs are "fine-tune ready" as soon as the build finishes.
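For readers unfamiliar with the format, JSONL is simply one JSON object per line. The sketch below shows that serialization step under stated assumptions: the `DocRecord` fields and the `toJsonl` function are hypothetical, and the record schema the plugin will ship is not specified here. Fine-tuning services each define their own record schema, so a mapping step from records like these may still be needed.

```typescript
// Hypothetical record shape; the released schema may use different fields.
interface DocRecord {
  title: string;
  url: string;
  text: string;
}

// Serializes each record as a single line. JSON.stringify escapes embedded
// newlines, so multi-line page text still occupies exactly one line.
function toJsonl(records: DocRecord[]): string {
  return records.map((r) => JSON.stringify(r) + "\n").join("");
}

const jsonl = toJsonl([
  { title: "Authentication", url: "/docs/auth", text: "Use the X-API-Key header.\nKeys are per-project." },
  { title: "Introduction", url: "/docs/intro", text: "Welcome to the API..." },
]);
console.log(jsonl);
```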

Token Optimization

Auto-cleans content to reduce "junk" tokens:

- Removes Docusaurus imports

- Strips HTML comments

- Collapses excessive newlines
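The three clean-up steps above can be sketched with plain regular expressions. This is a minimal sketch, not the plugin's code: `cleanForLlm` is a hypothetical name, and the import pattern is a simplification that would also remove single-line `import ... from '...'` statements inside code samples, which a real implementation would likely want to protect.

```typescript
// Applies the three clean-up steps in order and normalizes the trailing newline.
function cleanForLlm(mdx: string): string {
  return (
    mdx
      // Strip HTML comments, including multi-line ones.
      .replace(/<!--[\s\S]*?-->/g, "")
      // Drop single-line ES imports such as: import Tabs from '@theme/Tabs';
      .replace(/^import\s.+from\s+['"][^'"]+['"];?[ \t]*$/gm, "")
      // Collapse runs of three or more newlines into a single blank line.
      .replace(/\n{3,}/g, "\n\n")
      .trim() + "\n"
  );
}

const raw =
  "import Tabs from '@theme/Tabs';\n" +
  "import TabItem from '@theme/TabItem';\n\n" +
  "# Authentication\n\n" +
  "<!-- TODO: add OAuth -->\n\n\n\n" +
  "Use the `X-API-Key` header.\n";

console.log(cleanForLlm(raw));
```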